High Bandwidth Memory, commonly known as HBM, is a form of RAM designed to meet the growing demand for higher data transfer rates in modern computing. Unlike traditional memory layouts, HBM stacks multiple layers of DRAM chips vertically and connects them to the processor through a very wide interface. This design greatly increases bandwidth and reduces the power needed to move each bit of data.

The primary use of HBM is in areas that demand swift processing of large data volumes, such as in high-performance computing and advanced graphics rendering. Its ability to provide high data transfer speeds makes it ideal for graphics accelerators, network devices, and AI applications. HBM is particularly valuable in pushing the performance boundaries for data-intensive tasks and is becoming more prevalent in CPUs and FPGAs found in servers and supercomputers.

A Breakdown of HBM

High Bandwidth Memory (HBM) is a type of computer memory that offers super-fast speeds and low energy use. It’s perfect for tasks that need to move a lot of data quickly. Here’s what it’s used for:

- Graphics Cards: One of the earliest uses. Some high-end graphics cards use HBM to render complex images and videos with lightning speed. This makes for smoother gaming experiences and faster video editing.

- Big Data and AI: Artificial intelligence and machine learning chew through mountains of data. HBM speeds up this process, making it possible to train AI models faster and analyze huge datasets in record time.

- High-Performance Computing: Scientists and engineers use supercomputers for complex things like weather simulations and designing new materials. HBM gives these systems the memory speed they need to keep up.

- Networking: Network devices like routers and switches need to shuffle a lot of traffic. HBM helps them do this without creating lags or bottlenecks.

Here’s a table summarizing how HBM compares to conventional computer memory such as DDR modules (HBM is itself built from DRAM, but stacked and packaged differently):

| Feature | HBM | Conventional DRAM (e.g. DDR) |
|---|---|---|
| Bandwidth | Much higher | Lower |
| Power per bit transferred | Lower | Higher |
| Cost | More expensive | Less expensive |
| Size | Smaller footprint | Larger footprint |

Key Takeaways

- HBM is an advanced RAM technology that increases data transfer rates.

- This memory is used in high-performance computing and graphics.

- A vertical stacking design improves bandwidth and energy efficiency.

Technical Overview of High Bandwidth Memory

High Bandwidth Memory (HBM) provides fast, energy-efficient data handling for modern electronics, including AI processing units and high-performance graphics cards.

Design and Architecture

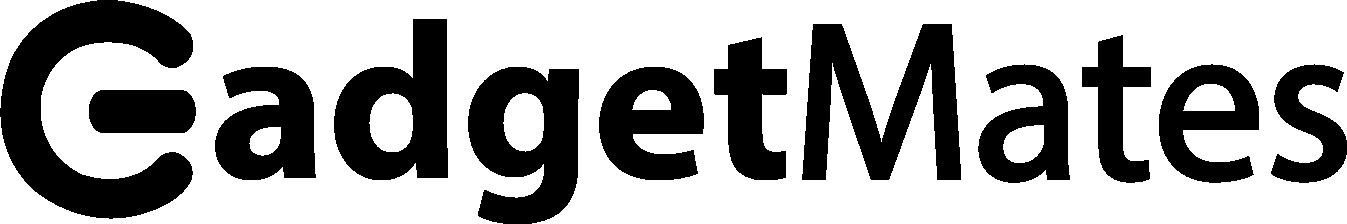

HBM is built from 3D-stacked DRAM dies linked vertically by Through-Silicon Vias (TSVs). Each stack sits beside the processor on a silicon interposer, an arrangement known as 2.5D packaging: the DRAM dies stack on top of one another, while the memory stack and the processor sit side by side on the interposer, which routes the signals between them. Because the memory shares a package with the processor, its footprint is much smaller than that of traditional memory types.

- Interposer: A thin piece of silicon that sits beneath the stacked memory and the processor and connects them to each other and to the package. Its fine, dense wiring supports far more connection points than a circuit board can, which is what makes HBM's wide interface practical.

- TSVs: Vertical electrical connections passing through the silicon dies, which let the stacked DRAM layers communicate quickly and with less power than conventional wire-bonded chip stacking.
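To make the width of this interface concrete, here is a minimal Python sketch of how one stack's data interface is divided into independent channels. The channel counts and widths are the commonly cited JEDEC figures for HBM2 (8 channels of 128 bits) and HBM3 (16 channels of 64 bits), each of which can be split into two half-width pseudo-channels; the `StackLayout` class and its field names are hypothetical, chosen only for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class StackLayout:
    """Hypothetical model of how one HBM stack divides its data interface."""
    generation: str
    channels: int          # independent channels per stack
    bits_per_channel: int  # data width of each channel

    @property
    def interface_bits(self) -> int:
        # Total data bits one stack exposes to the processor.
        return self.channels * self.bits_per_channel

    def pseudo_channels(self) -> tuple[int, int]:
        # Splitting each channel into two half-width pseudo-channels
        # doubles the count but leaves the total width unchanged.
        return self.channels * 2, self.bits_per_channel // 2


# Assumed layouts, based on commonly cited JEDEC channel organizations.
LAYOUTS = [
    StackLayout("HBM2", channels=8, bits_per_channel=128),
    StackLayout("HBM3", channels=16, bits_per_channel=64),
]

for layout in LAYOUTS:
    count, width = layout.pseudo_channels()
    print(f"{layout.generation}: {layout.channels} x {layout.bits_per_channel}-bit "
          f"= {layout.interface_bits} bits; pseudo-channels: {count} x {width}-bit")
```

Either way, a single stack exposes 1024 data bits, compared with 64 bits for a typical DDR4 channel, which is why the interposer's dense wiring matters.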

Performance Metrics

Memory performance is commonly described by three metrics: transfer rate, bandwidth, and latency.

- Transfer Rate: How fast each data pin moves bits, typically measured in gigabits per second per pin (Gbps) or megatransfers per second (MT/s).

- Bandwidth: Total amount of data that can be transferred per second across all channels, measured in gigabytes per second (GB/s).

- Latency: The delay between requesting data and the start of its delivery; shorter is better for overall performance.
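Transfer rate and bandwidth are linked: theoretical peak bandwidth is the per-pin transfer rate $r$ multiplied by the interface width $w$ in bits, divided by 8 to convert bits to bytes. As a worked example, assuming one HBM2 stack with a 1024-bit interface running at 2 Gbps per pin (a commonly cited HBM2 rate, used here as an illustrative assumption):

```latex
\[
BW_{\text{peak}} = \frac{w \times r}{8}
= \frac{1024 \times 2\ \text{Gb/s}}{8}
= 256\ \text{GB/s}
\]
```

This is a theoretical peak; sustained bandwidth is lower once refresh, command overhead, and access patterns are taken into account. Latency does not appear in the formula at all, which is why a memory can have very high bandwidth without having lower latency.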

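HBM provides significantly higher bandwidth than older GDDR5 and DDR4 memory because it moves data over many more parallel connections: 1024 data bits per stack, compared with 32 bits for a GDDR5 chip or 64 bits for a DDR4 channel. Each HBM pin actually runs slower than a GDDR5 pin, but the much wider interface more than makes up for it.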
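A minimal Python sketch of why more parallel data lines win, using commonly cited peak figures that are assumptions here rather than measurements: an HBM2 stack (1024 bits at 2 Gbps per pin), a GDDR5 chip (32 bits at 8 Gbps per pin), and a DDR4-3200 channel (64 bits at 3.2 Gbps per pin). The helper name `peak_bandwidth_gbs` is hypothetical.

```python
def peak_bandwidth_gbs(width_bits: int, gbps_per_pin: float) -> float:
    """Theoretical peak bandwidth in GB/s: pins x per-pin rate / 8 bits per byte."""
    return width_bits * gbps_per_pin / 8


# (interface width in bits, per-pin rate in Gbps); illustrative peak figures only.
INTERFACES = {
    "HBM2 stack": (1024, 2.0),
    "GDDR5 chip": (32, 8.0),
    "DDR4-3200 channel": (64, 3.2),
}

for name, (width, rate) in INTERFACES.items():
    print(f"{name:18} {width:5} bits x {rate:.1f} Gbps = "
          f"{peak_bandwidth_gbs(width, rate):6.1f} GB/s")

# How many GDDR5 chips would it take to match one HBM2 stack?
hbm2 = peak_bandwidth_gbs(*INTERFACES["HBM2 stack"])
gddr5 = peak_bandwidth_gbs(*INTERFACES["GDDR5 chip"])
print(f"GDDR5 chips per HBM2 stack: {hbm2 / gddr5:.0f}")
```

In this example each HBM pin runs at a quarter of the GDDR5 rate, yet the stack delivers eight times the bandwidth of one GDDR5 chip because it has 32 times as many data pins.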

Evolution and Standards

HBM has evolved through several iterations, with HBM2, HBM2E, and HBM3 standardized by the JEDEC standards body ("HBMnext" was an early vendor name for the generation after HBM2E, not a JEDEC standard). Each new standard raises memory capacity and transfer rate. HBM2E, for instance, supports more capacity per stack than its predecessors, serving growing high-performance computing needs.

- HBM2: Doubles the per-pin transfer rate of the original HBM (from 1 to 2 Gbps) and supports higher capacity per stack, a significant upgrade in speed and energy efficiency.

- HBM3: Targets higher bandwidth and lower voltage operation, aiming at the latest high-performance applications.

- JEDEC Standards: Provide consistency and interoperability across different HBM technologies and manufacturers.
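To put the generations side by side, the sketch below computes peak per-stack bandwidth from per-pin transfer rates, assuming the 1024-bit stack interface used from HBM through HBM3. The rates (1.0, 2.0, 3.2, and 6.4 Gbps) are commonly cited top-of-spec figures and are assumptions for illustration; shipping parts and later spec revisions vary, so the relevant JEDEC standard or vendor datasheet is the authority. The names `PIN_RATES_GBPS` and `stack_bandwidth_gbs` are hypothetical.

```python
STACK_WIDTH_BITS = 1024  # data bits per stack, HBM through HBM3

# Per-pin transfer rates in Gbps; commonly cited figures, assumed here.
PIN_RATES_GBPS = {
    "HBM": 1.0,
    "HBM2": 2.0,
    "HBM2E": 3.2,
    "HBM3": 6.4,
}


def stack_bandwidth_gbs(gbps_per_pin: float, width_bits: int = STACK_WIDTH_BITS) -> float:
    """Theoretical peak bandwidth of one stack in GB/s (total Gb/s divided by 8)."""
    return width_bits * gbps_per_pin / 8


previous = None
for generation, rate in PIN_RATES_GBPS.items():
    bandwidth = stack_bandwidth_gbs(rate)
    # Compare each generation with the one before it.
    gain = f"{bandwidth / previous:.1f}x previous" if previous is not None else "baseline"
    print(f"{generation:6} {rate:.1f} Gbps/pin -> {bandwidth:6.1f} GB/s per stack ({gain})")
    previous = bandwidth
```

Under these assumptions the step from HBM to HBM2 doubles per-stack bandwidth from 128 to 256 GB/s, and HBM3 reaches roughly 819 GB/s per stack without widening the interface.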

Applications and Use Cases

High Bandwidth Memory (HBM) plays a key role in advancing a range of technologies, boosting performance while keeping power use low.

Computing and Processing

HBM finds extensive use in computing, where fast data processing is critical. Data-center GPUs and accelerators from AMD and Nvidia, along with some server CPUs such as Intel's Xeon Max series, use HBM for its high data transfer rates, which are vital for Artificial Intelligence (AI), Machine Learning, and High Performance Computing (HPC). FPGAs and specialized AI ASICs also benefit from its high-speed memory interface, which lets them handle complex workloads quickly.

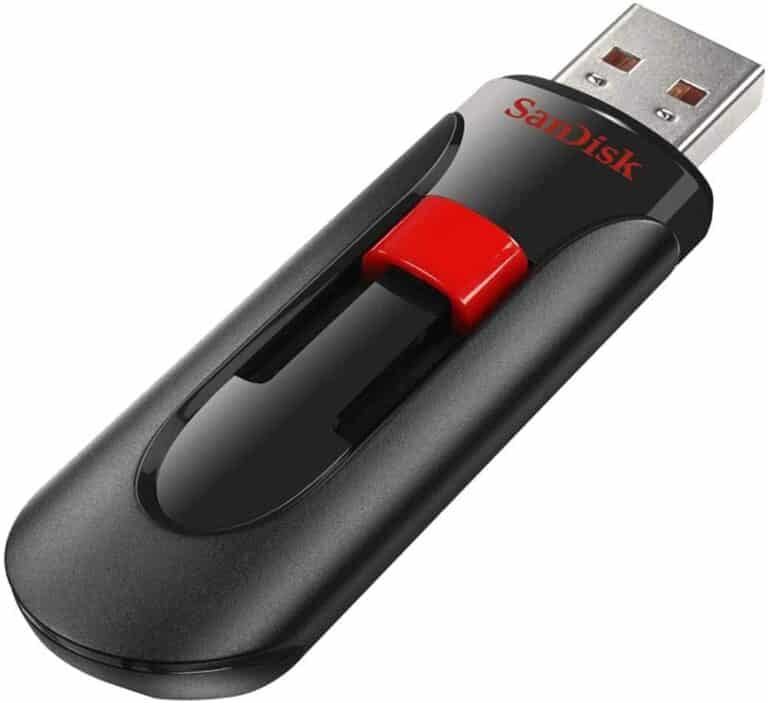

Storage and Networking

In the storage and networking field, HBM helps manage high data flows. It can serve high-end networking equipment, including infrastructure for technologies like 5G, where throughput is paramount. HBM's compact form factor and efficiency also benefit cloud storage and data centers, reducing the physical space taken up by memory and saving energy.

Future Directions

Looking ahead, HBM may expand into areas such as automotive systems and computer vision, especially as System-on-Chip designs become more common. Growing demand for HBM points to broader adoption across different sectors, with Samsung, SK Hynix, and Micron leading manufacturing advancements. Systems need memory that keeps pace with their processors, memory controllers, and buses to avoid bottlenecks, and HBM is positioned to meet that need.

Frequently Asked Questions

This section provides clear answers to common questions about high bandwidth memory and its uses.

What applications benefit most from using high bandwidth memory?

Applications that require fast data processing, like artificial intelligence, data analytics, and professional graphics work, see a significant boost from high bandwidth memory. Its higher data transfer rates are crucial for these tasks.

How does HBM differ from traditional memory architectures?

High bandwidth memory provides higher bandwidth by stacking memory chips on top of one another and connecting them to the processor through a very wide interface, which traditional memory like DDR does not do. HBM also uses less power per bit transferred and takes up less space than older architectures.

Can high bandwidth memory improve gaming performance?

Yes, high bandwidth memory can enhance gaming performance when a game is limited by memory bandwidth, because it allows faster access to graphics data. This can keep frame rates smooth at higher detail settings, particularly in games with high-resolution textures and complex environments.

What are the advantages of using HBM over GDDR5 in graphics cards?

HBM outperforms GDDR5 in terms of bandwidth and energy efficiency. Graphics cards with HBM can handle more data at once and use less power, making them preferable for high-end gaming and graphics-intensive applications.

How do HBM and HBM2 compare in terms of performance?

HBM2 takes the capabilities of HBM further, offering double the bandwidth, more memory capacity and enhanced power efficiency. These improvements make HBM2 a better choice for very demanding computing tasks.

In what scenarios is HBM3 preferred over its predecessors?

HBM3 is chosen over earlier versions when the highest memory bandwidth and capacity are needed. It suits applications in supercomputing and advanced graphics where every bit of performance makes a difference and justifies the cost.